Approximate Bayesian Optimisation for Neural Networks

By Nadhir Hassen & Irina Rish in Research Journal of Machine Learning

July 10, 2021

Abstract

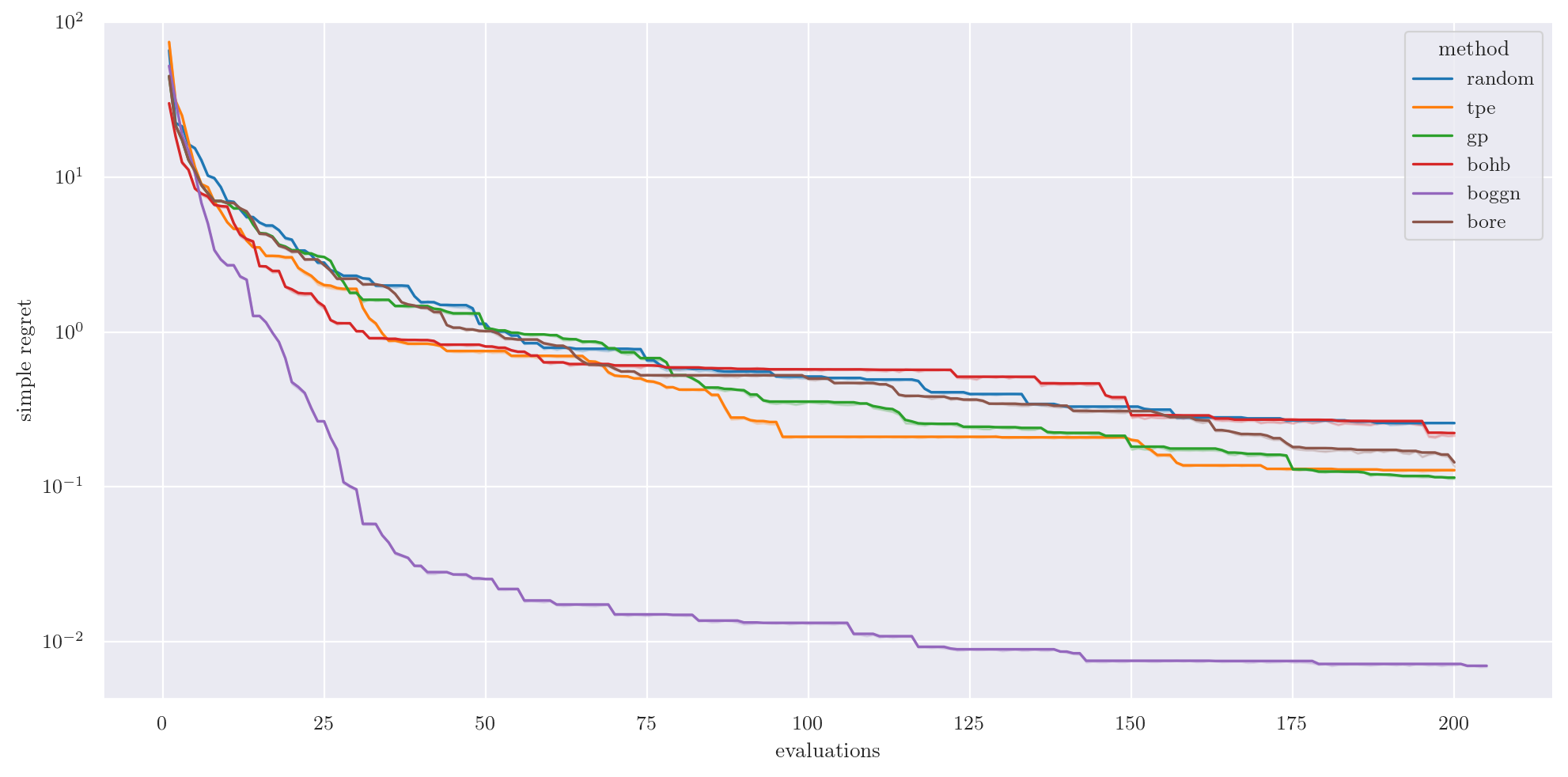

A substantial body of work has aimed to automate machine learning algorithms and has highlighted the importance of model choice. Automating the selection of the best forecasting model and its corresponding parameters can improve a wide range of real-world applications. Bayesian optimisation (BO) is a black-box optimisation method that proposes candidate solutions according to an exploration-exploitation trade-off, encoded by acquisition functions. The BO framework relies on two key ingredients: a probabilistic surrogate model, which encodes prior beliefs about the unknown behaviour of the model, and an objective function that measures how well the model fits. Evaluating candidate models and their hyperparameters can be very expensive, and the surrogate is typically fit using Gaussian processes (GPs). However, since GP inference scales cubically with the number of observations, it has been challenging to handle objectives whose optimisation requires many evaluations. In addition, most real-world datasets are non-stationary, which makes the idealised assumptions of standard surrogate models unrealistic. Achieving analytical tractability and computational feasibility in a stochastic fashion is therefore necessary to ensure the efficiency and applicability of Bayesian optimisation. In this paper, we explore approximate inference with Bayesian neural networks (BNNs) as an alternative to GPs for modelling distributions over functions. Our contribution is a link between density-ratio estimation and class-probability estimation based on approximate inference; this reformulation makes the algorithm efficient and tractable.
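The link between density-ratio estimation and class-probability estimation can be made concrete with a small sketch. Assume, as in BORE (Tiao et al., 2021), that observations whose loss is at or below the γ-quantile τ are labelled z = 1 and the rest z = 0, with ℓ(x) = p(x | y ≤ τ) and g(x) = p(x | y > τ). A classifier then estimates π(x) = P(z = 1 | x) = γℓ(x) / (γℓ(x) + (1 - γ)g(x)), so the γ-relative density ratio r_γ(x) = ℓ(x) / (γℓ(x) + (1 - γ)g(x)) equals π(x)/γ, and maximising the classifier's probability maximises the ratio. This is an illustration of that general idea, not the authors' implementation: a plain logistic regression on hypothetical quadratic features stands in for the Bayesian neural network, the 1-D objective is made up, and π(x) is maximised over a fixed grid. All names (`objective`, `features`, `fit_logistic`, `propose`) are illustrative.

```python
import math
import random


def objective(x):
    # Hypothetical 1-D black-box loss to minimise (stands in for a validation loss).
    return (x - 0.3) ** 2 + 0.1 * math.sin(12 * x)


def features(x):
    # Hypothetical quadratic features so a linear classifier can isolate an interval.
    return [1.0, x, x * x]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, labels, lr=0.5, steps=1000):
    """Full-batch gradient descent on the logistic loss.

    Assumed stand-in for a BNN classifier: returns pi(x), an estimate of P(z = 1 | x).
    """
    w = [0.0, 0.0, 0.0]
    n = len(xs)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, z in zip(xs, labels):
            phi = features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, phi)))
            for j in range(3):
                grad[j] += (p - z) * phi[j]
        w = [wi - lr * gj / n for wi, gj in zip(w, grad)]
    return lambda x: sigmoid(sum(wi * fi for wi, fi in zip(w, features(x))))


def propose(xs, ys, candidates, gamma=0.25):
    # tau is the gamma-quantile of observed losses; points at or below it get z = 1.
    k = max(1, math.ceil(gamma * len(ys)))
    tau = sorted(ys)[k - 1]
    labels = [1 if y <= tau else 0 for y in ys]
    pi = fit_logistic(xs, labels)
    # r_gamma(x) = pi(x) / gamma, so the argmax of pi is the argmax of the density ratio.
    return max(candidates, key=pi)


random.seed(0)
xs = [random.random() for _ in range(8)]  # initial random design
ys = [objective(x) for x in xs]
initial_best = min(ys)
grid = [i / 200 for i in range(201)]  # candidate set for the inner maximisation
for _ in range(10):
    x_next = propose(xs, ys, grid)
    xs.append(x_next)
    ys.append(objective(x_next))
best = min(ys)
print(f"best loss after {len(ys)} evaluations: {best:.4f}")
```

Each proposal only requires fitting a classifier, whose cost per gradient step is linear in the number of observations, whereas a GP surrogate must factorise an n × n kernel matrix, which is the cubic cost mentioned above. Replacing `fit_logistic` with a BNN fitted by approximate inference, as the abstract proposes, would additionally give π(x) a notion of uncertainty.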

Automate Machine Learning

Improve Hyperparameter Learning with Approximate Bayesian Optimization.
